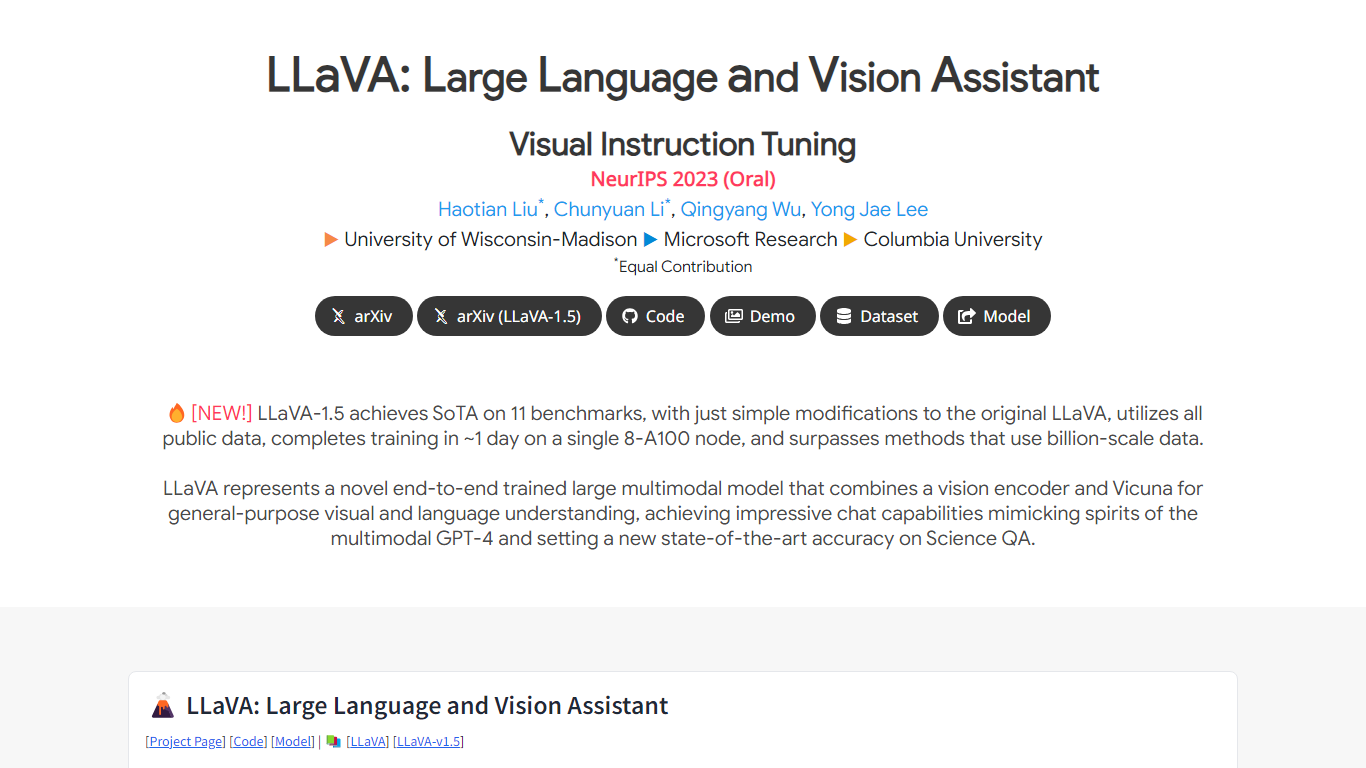

Discover **LLaVA**, the Large Language and Vision Assistant, presented as an oral at NeurIPS 2023. LLaVA is built with visual instruction tuning: a language model is tuned on instructions paired with images so that it can follow requests about visual content. The project is the work of Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee, researchers from the University of Wisconsin-Madison, Microsoft Research, and Columbia University, with Haotian Liu and Chunyuan Li credited as equal contributors.

**LLaVA** makes its resources openly available to the AI community: preprints on arXiv, the source code, a live demo, the datasets, and the model itself, along with updated releases such as LLaVA-1.5. These resources give researchers and practitioners a foundation for their own work on language and vision applications.

Top Features:

- **Collaborative Effort:** A joint project by researchers at the University of Wisconsin-Madison, Microsoft Research, and Columbia University, with equal contribution credited to lead authors Haotian Liu and Chunyuan Li.

- **LLaVA-1.5 Updated Version:** Access to an updated release of the LLaVA model with improvements over the original version.

- **Comprehensive Resources:** Includes access to the project’s arXiv preprints, source code, demo, datasets, and model for community use and contribution.

- **NeurIPS Oral Presentation:** The project was selected for an oral presentation at NeurIPS 2023, a recognition that highlights its significance in the field.

- **Visual Instruction Tuning:** Tunes a language model on instructions paired with images, improving its ability to understand visual content and respond to requests about it.