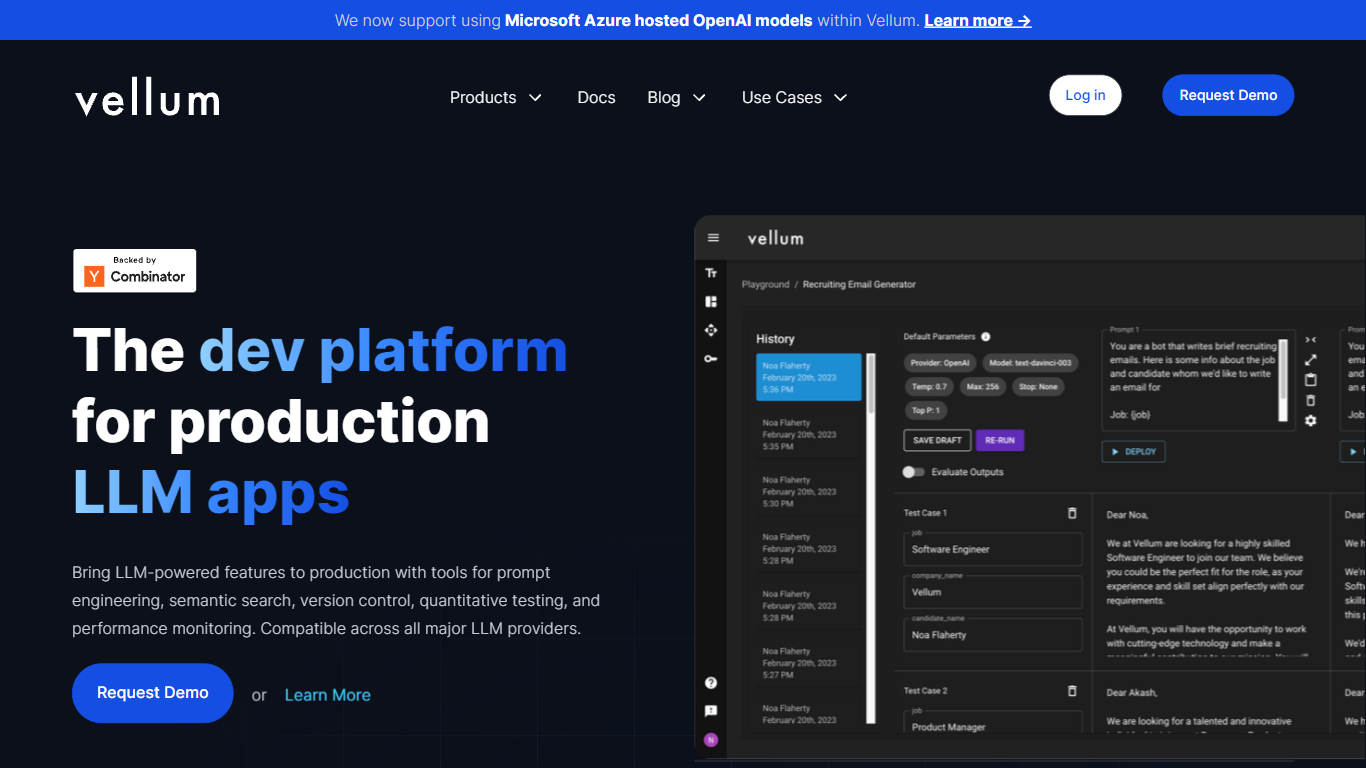

Vellum is a developer platform for building and deploying large language model (LLM) applications at scale. It provides tools for prompt engineering, semantic search, version control, quantitative testing, and performance monitoring. The platform is compatible with all major LLM providers, including OpenAI models hosted on Microsoft Azure, which gives developers a provider-agnostic environment for building applications.

Developers can prototype quickly and iterate on their LLM applications using tools for testing and comparing prompts and models. Vellum also supports testing, versioning, and monitoring of deployed LLM applications, so teams can check how they perform in real-world scenarios. Its workflow capabilities support building and maintaining complex LLM chains, and its test suites help evaluate the quality of LLM outputs at scale.
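The idea of comparing prompts against a suite of test cases can be sketched in a few lines of Python. This is a generic illustration, not Vellum's API: `TestCase`, `score_prompt`, `compare_prompts`, and the `fake_model` stub are all hypothetical names, and the stub stands in for a real LLM call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TestCase:
    """One evaluation example: template variables plus the expected answer."""
    inputs: Dict[str, str]
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the output equals the expected text (case-insensitive)."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def score_prompt(
    template: str,
    model: Callable[[str], str],
    cases: List[TestCase],
    metric: Callable[[str, str], float] = exact_match,
) -> float:
    """Return the mean metric value of one prompt template over all cases."""
    if not cases:
        return 0.0
    total = 0.0
    for case in cases:
        prompt = template.format(**case.inputs)
        total += metric(model(prompt), case.expected)
    return total / len(cases)


def compare_prompts(
    templates: Dict[str, str],
    model: Callable[[str], str],
    cases: List[TestCase],
) -> Dict[str, float]:
    """Score every named template against the same test cases."""
    return {name: score_prompt(t, model, cases) for name, t in templates.items()}


# Hypothetical stand-in for an LLM: it answers tersely only when asked to.
CAPITALS = {"France": "Paris", "Japan": "Tokyo"}


def fake_model(prompt: str) -> str:
    for country, capital in CAPITALS.items():
        if country in prompt:
            if "one word" in prompt.lower():
                return capital
            return f"The capital of {country} is {capital}."
    return "unknown"


cases = [
    TestCase({"country": "France"}, "Paris"),
    TestCase({"country": "Japan"}, "Tokyo"),
]
templates = {
    "verbose": "What is the capital of {country}?",
    "terse": "Answer in one word. What is the capital of {country}?",
}
scores = compare_prompts(templates, fake_model, cases)
best = max(scores, key=scores.get)
```

With the stub model, the `terse` template scores higher than `verbose` under exact matching. In practice the metric would usually be something looser than exact match, and the model callable would wrap a real provider.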

For companies that want to apply AI in their own fields, Vellum aims to ease the move from prototype to production, and enterprises benefit from the platform’s streamlined API and observability tools. Customers praise Vellum for its ease of deployment, robust error checking, and support for collaboration across diverse teams. Vellum positions itself as a developer platform for teams that want to focus on delivering customer-centric AI applications without the overhead of complex AI tooling.

Top Features:

- **Prompt Engineering Tools:** Simplify the development of prompts with collaboration and testing tools.

- **Version Control System:** Efficiently track and manage changes in your LLM applications.

- **Provider Agnostic Architecture:** Choose from various LLM providers and seamlessly switch as needed.

- **Production-grade Monitoring:** Track and analyze model performance in production with observability tools.

- **Easy API Integration:** Integrate LLM applications through a simplified, low-latency API.
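
As a rough illustration of what a provider-agnostic design means in code, the sketch below routes requests through one shared interface so that switching providers is a one-string change. It does not reflect Vellum's actual implementation: `CompletionProvider`, `EchoProvider`, `UppercaseProvider`, `PROVIDERS`, and `run` are hypothetical names, and the two providers are trivial stubs rather than real LLM clients.

```python
from typing import Dict, Protocol


class CompletionProvider(Protocol):
    """Common interface that every provider adapter is assumed to satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stub provider that returns the prompt with a prefix."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseProvider:
    """Stub provider that returns the prompt in upper case."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Registry keyed by provider name; application code never imports a
# concrete provider class directly.
PROVIDERS: Dict[str, CompletionProvider] = {
    "echo": EchoProvider(),
    "upper": UppercaseProvider(),
}


def run(provider_name: str, prompt: str) -> str:
    """Send the prompt to whichever provider the caller names."""
    try:
        provider = PROVIDERS[provider_name]
    except KeyError:
        raise ValueError(f"unknown provider: {provider_name!r}") from None
    return provider.complete(prompt)
```

Calling `run("echo", "hi")` and `run("upper", "hi")` exercises both stubs through the same call site, which is the property that makes swapping providers cheap.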